I am currently a research scientist at Tencent Hunyuan, working on video generation for digital humans. Before that, I interned at Tencent AI Lab for two years, working on 3D digital human/head reconstruction and editing with Di Kang (康頔) and Linchao Bao (暴林超). I also interned at the Interaction Intelligence Lab, Ant Research, for one year, working with Xuan Wang (王璇) on low-cost reconstruction of high-quality 3D humans and heads.

I received my Ph.D. from the Department of Computer Science and Technology, Tsinghua University (清华大学计算机科学与技术系), advised by Prof. Song-Hai Zhang (张松海) and Prof. Yu-Ping Wang (王瑀屏), in the CSCG lab founded by Prof. Shimin Hu (胡事民). Before that, I received my bachelor's degree from the School of Computer and Communication Engineering, University of Science and Technology Beijing (北京科技大学计算机与通信工程学院).

My research interests include video generation, digital humans, and computer vision, spanning 2D/3D digital human applications, image/video generative models, and SFT/RLHF techniques.

🔥 News

- 2025.07: I joined Tencent Hunyuan as a research scientist.

- 2025.07: 🎉 2 papers were accepted by ICCV 2025!

- 2025.03: 🎉 MeGA was accepted by CVPR 2025!

- 2024.07: I joined Ant Group as an intern.

- 2023.08: 🎉 Neural Point-based Volumetric Avatar was accepted by SIGGRAPH Asia 2023!

- 2023.07: 🎉 LoLep was accepted by ICCV 2023!

- 2022.07: I joined Tencent AI Lab as an intern.

- 2022.02: 🎉 MotionHint was accepted by ICRA 2022!

📝 Publications

He-Yi Sun, Cong Wang, Tian-Xing Xu, Jingwei Huang, Di Kang, Chunchao Guo, Song-Hai Zhang

- SVG-Head models global appearance as a texture image with surf-GS and renders non-Lambertian regions with high fidelity using vol-GS, supporting both high-quality rendering and real-time, fine-grained texture editing.

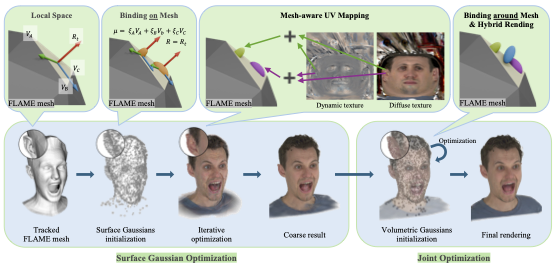

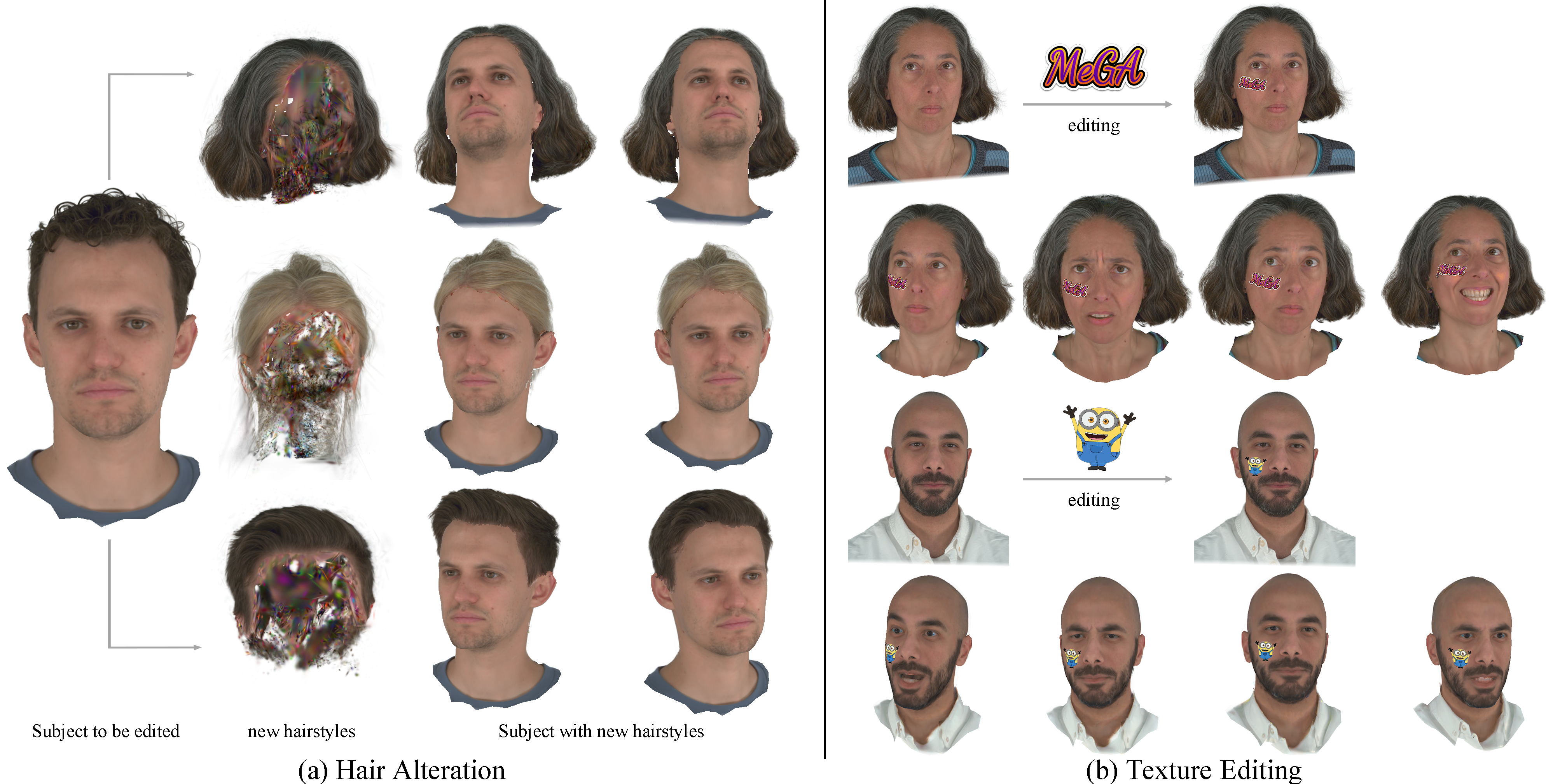

MeGA: Hybrid Mesh-Gaussian Head Avatar for High-Fidelity Rendering and Head Editing

Cong Wang, Di Kang, He-Yi Sun, Shen-Han Qian, Zi-Xuan Wang, Linchao Bao, Song-Hai Zhang

- By using a more suitable representation for each part of the human head, together with a corresponding hybrid rendering method, we produce high-quality 3D head avatars and, for the first time, support head editing (i.e., texture editing and hair alteration).

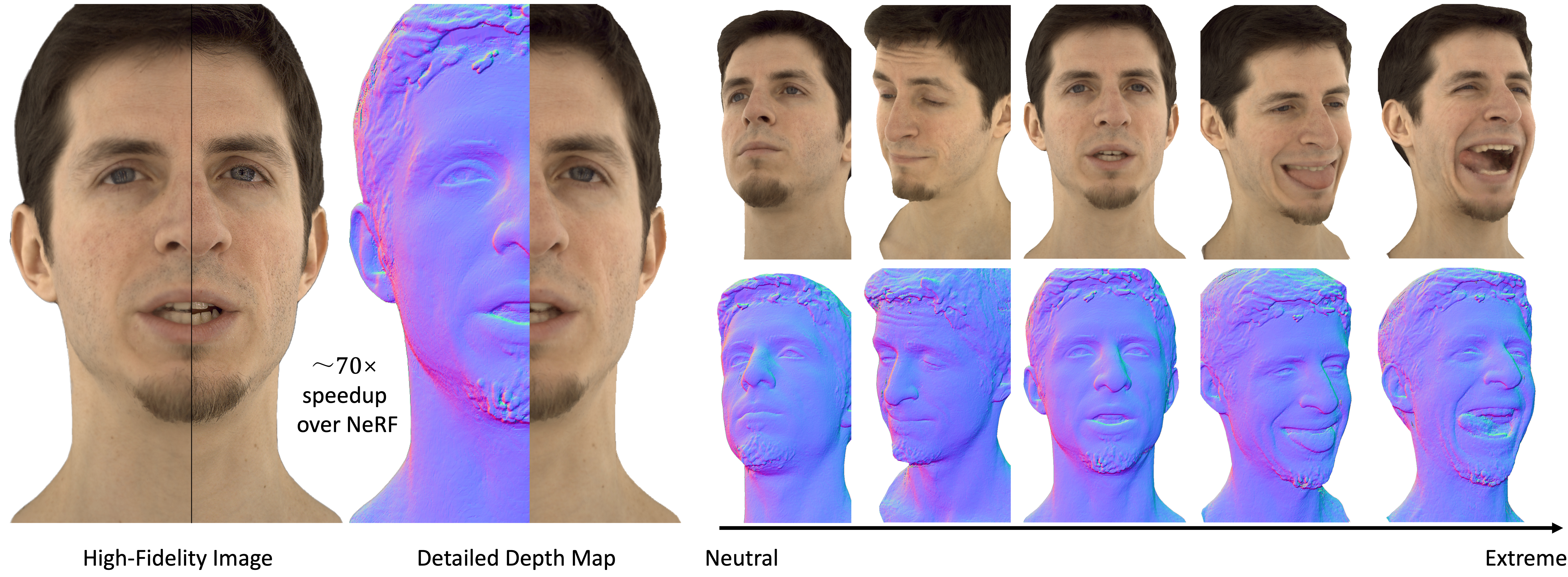

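Neural Point-based Volumetric Avatar: Surface-guided Neural Points for Efficient and Photorealistic Volumetric Head Avatar

Cong Wang, Di Kang, Yan-Pei Cao, Linchao Bao, Ying Shan, Song-Hai Zhang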

- We propose a surface-guided neural point representation and a corresponding rendering acceleration method that substantially improve the rendering quality of the eyes and mouth interior in 3D head avatar modeling.

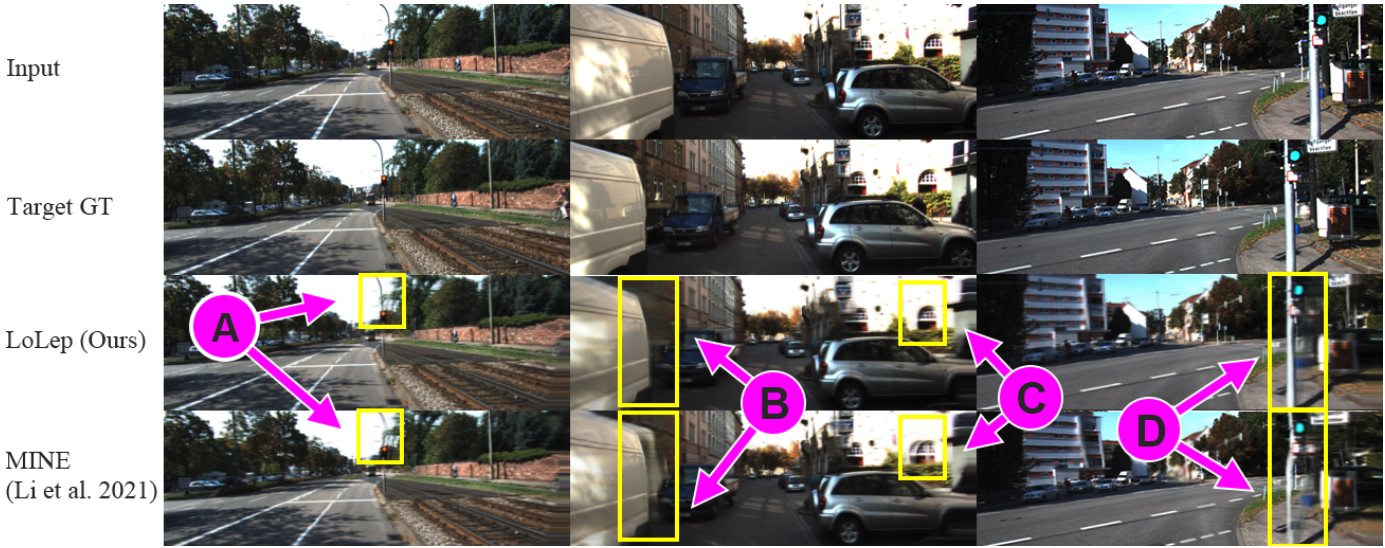

LoLep: Single-View View Synthesis with Locally-Learned Planes and Self-Attention Occlusion Inference

Cong Wang, Yu-Ping Wang, Dinesh Manocha

- We use an NN Sampler to predict more reliable and plausible MPI plane locations, and introduce a reprojection loss to facilitate learning the sampler. We also propose a block self-attention mechanism that improves the network's ability to infer occluded regions.

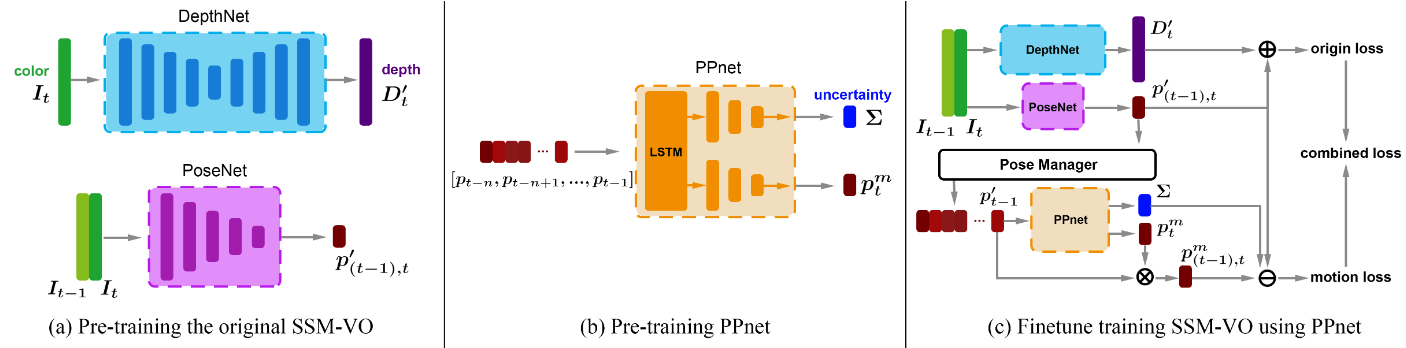

MotionHint: Self-Supervised Monocular Visual Odometry with Motion Constraints

Cong Wang, Yu-Ping Wang, Dinesh Manocha

- We introduce a motion prior for camera carriers (mainly unmanned vehicles) through a pre-trained PPNet, which is used to correct the predicted camera poses, yielding state-of-the-art results.

- ORBBuf: A robust buffering method for remote visual SLAM, Yu-Ping Wang, Zi-xin Zou, Cong Wang, et al. IROS 2021

🎖 Honors and Awards

- 2024.11 Huawei Scholarship (¥20,000)

- 2024.07 2023 Tencent AI Lab Rhino-Bird Elite Talent, Excellence Award

- 2023.10 The Second Prize Scholarship (¥5,000)

- 2023.09 Longhu Scholarship (¥5,000)

- 2023.05 2023 Tencent AI Lab Rhino-Bird Elite Talent

- 2022.10 The Second Prize Scholarship (¥5,000)

- 2022.09 Longhu Scholarship (¥5,000)

- 2020.06 Excellent Graduate of Beijing (Top 5%)

- 2019.11 National Scholarship (¥8,000, 1/446)

- 2019.04 Mathematical Contest in Modeling (MCM), Meritorious Winner (Top 4%)

- 2018.11 National Scholarship (¥8,000, 1/446)

- 2018.04 Mathematical Contest in Modeling (MCM), Meritorious Winner (Top 4%)

- 2017.11 People’s Special Scholarship (¥5,000, 1/145)

- 2017.11 “Guan Zhi” Scholarship (¥10,000, 1/446)

📖 Education

- 2020.09 - 2025.06, Ph.D., Department of Computer Science and Technology, Tsinghua University, Beijing.

- 2016.09 - 2020.06, Undergraduate, School of Computer and Communication Engineering, University of Science and Technology Beijing, Beijing.

💬 Invited Talks

- 2023.12, Oral Presentation for “Neural Point-based Volumetric Avatar: Surface-guided Neural Points for Efficient and Photorealistic Volumetric Head Avatar”, SIGGRAPH Asia 2023, Sydney, NSW, Australia.

- 2023.11, Paper presentation, invited by the Journal of Image and Graphics (video on bilibili).

- 2022.07, Paper presentation, invited by BKUNYUN (video on bilibili).

- 2022.05, Oral Presentation for “MotionHint: Self-Supervised Monocular Visual Odometry with Motion Constraints”, ICRA 2022, Philadelphia, PA, USA.

💻 Work

- 2025.07 - now, Tencent Hunyuan, Beijing.

- 2025.05 - 2025.07, Taotian Group, Hangzhou.

- 2024.07 - 2025.05, Ant Group, Hangzhou.

- 2022.07 - 2024.07, Tencent AI Lab, Beijing.